The artificial intelligence landscape is evolving rapidly, and one significant development comes from Fractal AI, a global AI and analytics firm based in Mumbai, India. The company has released Fathom-R1-14B, a groundbreaking open-source AI reasoning model.

This model marks a pivotal moment for accessible, high-performance AI. It is a key part of Fractal’s ambitious “Project Ramanujan”. This initiative aligns with the broader IndiaAI Mission.

Fractal’s CEO, Srikanth Velamakanni, calls it “just a tiny proof of what’s possible”, hinting at plans for a larger 70-billion-parameter model. The release also signals India’s commitment to AI self-reliance.

Notably, the model’s post-training cost was just $499. This makes advanced reasoning AI far more accessible. Because the release is fully open source, including weights and training recipes, researchers and developers worldwide can build on it.

Key Takeaways

- Fathom-R1-14B shows that high-performance AI doesn’t have to be expensive: it was post-trained for just $499, yet it rivals some closed-source models such as o4-mini-low.

- Its open-source nature and long context window make it ideal for solving complex reasoning tasks across education, enterprise, and research.

- India’s Project Ramanujan and Fractal AI are leading the way in democratizing advanced AI, showcasing the nation’s growing tech leadership.

Technical Prowess and Architectural Blueprint

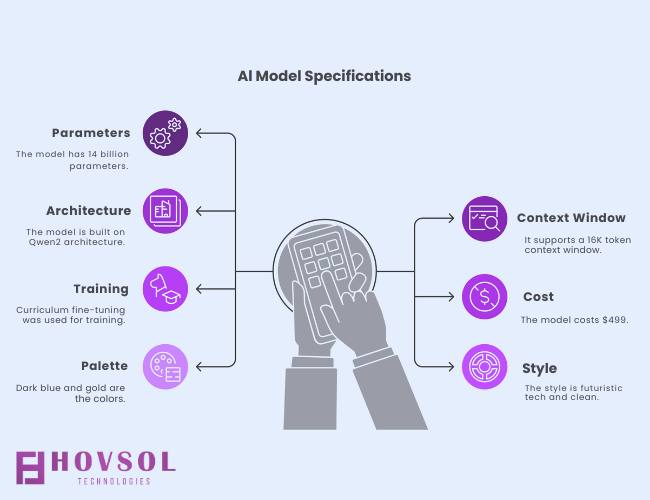

Fathom-R1-14B is a 14-billion-parameter language model (about 14.8 billion parameters, to be precise). It is fine-tuned specifically for complex mathematical and general reasoning tasks.

The model is built on DeepSeek-R1-Distill-Qwen-14B, a compact, efficient Qwen-family model fine-tuned on reasoning data distilled from DeepSeek-R1. It uses the Qwen2 architecture. If you’re unfamiliar with DeepSeek AI, explore our guide on what DeepSeek AI is and how it powers models like Fathom-R1-14B.

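This architecture relies on self-attention, which lets each token weigh its relationship to the tokens before it. That ability is crucial for capturing the intricate dependencies in complex problems. Combined with Fractal’s training, this design helps the model produce what the company describes as “concise yet accurate mathematical reasoning”.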
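To make the idea concrete, here is a minimal single-head version of scaled dot-product self-attention in plain Python. It is the generic textbook mechanism, not Qwen2’s actual implementation, which adds multiple heads, grouped-query attention, rotary position embeddings, and optimized kernels. The causal mask mirrors how a decoder-only model lets each token attend only to itself and earlier tokens.

```python
import math


def softmax(xs):
    # Subtract the max before exponentiating for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values, causal=True):
    """Single-head scaled dot-product attention over lists of vectors.

    queries, keys, values: one float vector per token (same token count).
    With causal=True, token i only attends to tokens 0..i.
    """
    scale = math.sqrt(len(keys[0]))
    outputs = []
    for i, q in enumerate(queries):
        visible = range(i + 1) if causal else range(len(keys))
        # Similarity between this token's query and each visible key.
        scores = [sum(a * b for a, b in zip(q, keys[t])) / scale for t in visible]
        weights = softmax(scores)
        # Output is the attention-weighted sum of the visible value vectors.
        out = [
            sum(w * values[t][j] for w, t in zip(weights, visible))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
    return outputs
```

With identical keys, every visible token gets the same weight, so token i’s output is simply the average of the first i + 1 value vectors; in a trained model, learned query and key projections make these weights highly uneven.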

A key feature is its 16,384-token (16K) context window. This capacity accommodates detailed problem statements and lengthy reasoning chains. It balances capability with computational efficiency during inference.
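Because the prompt and the model’s generated reasoning share the same 16,384-token window, a long problem statement leaves less room for the reasoning chain. The helper below does that arithmetic; the 1,200-token prompt is a hypothetical figure, and in practice you would count tokens with the model’s own tokenizer.

```python
# Context window stated for Fathom-R1-14B.
CONTEXT_WINDOW = 16_384


def reasoning_budget(prompt_tokens: int, context_window: int = CONTEXT_WINDOW) -> int:
    """Return how many tokens are left for reasoning and the final answer."""
    if prompt_tokens < 0:
        raise ValueError("prompt_tokens must be non-negative")
    remaining = context_window - prompt_tokens
    if remaining <= 0:
        raise ValueError(
            f"a {prompt_tokens}-token prompt leaves no room in a {context_window}-token window"
        )
    return remaining


print(reasoning_budget(1_200))  # 15184
```

A value like this is what you would pass as a generation cap (for example, `max_new_tokens` in Hugging Face Transformers) so the output never overruns the window.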

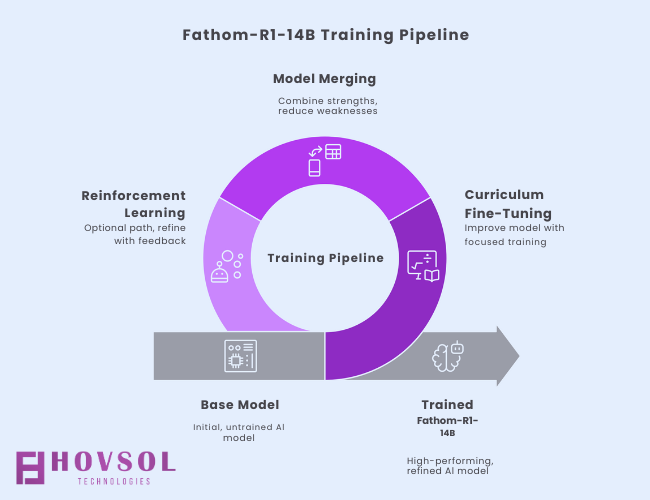

The model’s remarkable cost-effectiveness comes from its training strategy. This includes curriculum-based supervised fine-tuning (SFT). Training progresses from simpler to Olympiad-level problems.
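The article describes the curriculum only as progressing from simpler to Olympiad-level problems, so the following is just an illustration of that easy-to-hard idea: examples are tagged with a difficulty tier and grouped into stages that a trainer would fine-tune on one after another. The tier names, the `Example` type, and the sample problems are hypothetical, and the fine-tuning step itself is left out.

```python
from dataclasses import dataclass

# Hypothetical difficulty tiers, ordered from easiest to hardest.
TIERS = ("basic", "intermediate", "competition", "olympiad")


@dataclass
class Example:
    problem: str
    tier: str  # one of TIERS


def curriculum_stages(examples):
    """Group examples into one stage per tier, easiest tier first.

    Within a tier, the original order is kept. Unknown tiers raise an
    error instead of being silently dropped.
    """
    unknown = {ex.tier for ex in examples} - set(TIERS)
    if unknown:
        raise ValueError(f"unknown tiers: {sorted(unknown)}")
    return [[ex for ex in examples if ex.tier == tier] for tier in TIERS]


def curriculum_order(examples):
    """Flatten the stages into a single easy-to-hard training sequence."""
    return [ex for stage in curriculum_stages(examples) for ex in stage]


data = [
    Example("Prove an inequality from a national olympiad", "olympiad"),
    Example("Solve 2x + 3 = 11", "basic"),
    Example("Count lattice paths on a 4x4 grid", "competition"),
    Example("Factor x^2 - 5x + 6", "intermediate"),
]
for ex in curriculum_order(data):
    print(ex.tier, "|", ex.problem)
```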

Fractal also merged several separately fine-tuned versions of the model. A sibling model, Fathom-R1-14B-RS, was trained with reinforcement learning and cost $967 to post-train. Fractal open-sourced all training recipes and datasets.
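The article does not say which merging method Fractal used. One common, simple approach is to take a weighted average of checkpoints fine-tuned from the same base model; the sketch below shows that linear merge, with parameters stored as flat Python lists instead of tensors to stay dependency-free. The parameter names and values are hypothetical.

```python
import math


def merge_checkpoints(checkpoints, weights=None):
    """Weighted average of checkpoints that share parameter names and sizes.

    checkpoints: list of dicts mapping parameter name -> flat list of floats.
    weights: one mixing coefficient per checkpoint, summing to 1
             (defaults to a uniform average).
    """
    if not checkpoints:
        raise ValueError("need at least one checkpoint")
    n = len(checkpoints)
    if weights is None:
        weights = [1.0 / n] * n
    if len(weights) != n or not math.isclose(sum(weights), 1.0):
        raise ValueError("weights must have one entry per checkpoint and sum to 1")

    names = set(checkpoints[0])
    if any(set(ckpt) != names for ckpt in checkpoints[1:]):
        raise ValueError("all checkpoints must have the same parameter names")

    merged = {}
    for name in checkpoints[0]:
        size = len(checkpoints[0][name])
        if any(len(ckpt[name]) != size for ckpt in checkpoints):
            raise ValueError(f"size mismatch for parameter {name!r}")
        # Element-wise weighted sum across all checkpoints.
        merged[name] = [
            sum(w * ckpt[name][i] for w, ckpt in zip(weights, checkpoints))
            for i in range(size)
        ]
    return merged


# Two hypothetical fine-tuned variants of the same tiny "model".
variant_a = {"layer.weight": [1.0, 2.0], "layer.bias": [0.0]}
variant_b = {"layer.weight": [3.0, 4.0], "layer.bias": [1.0]}
print(merge_checkpoints([variant_a, variant_b]))
# {'layer.weight': [2.0, 3.0], 'layer.bias': [0.5]}
```

Averaging only makes sense when the checkpoints share an architecture and a common starting point, which is why the function rejects mismatched names or sizes.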

Benchmark Performance and Comparisons

Strong benchmark results back up Fathom-R1-14B’s proficiency. On the challenging AIME 2025 exam, it achieved a Pass@1 accuracy of 52.7% and a consistency@64 score of 76.7%.

For the HMMT 2025, it scored 35.3% Pass@1. The consistency@64 for HMMT was 56.7%. Impressively, it achieved a perfect score (32/32) on IIT-JEE Advanced (Math) integer-type questions.
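The two metrics above are reported without definitions. Under the usual convention, which this sketch assumes, Pass@1 is the accuracy of a single sampled answer averaged over several samples per problem, while consistency@64 samples 64 answers and counts the problem as solved if the majority-vote answer is correct. The toy data uses 4 samples instead of 64 and is made up for illustration.

```python
from collections import Counter


def pass_at_1(samples, reference):
    """Fraction of sampled answers that match the reference."""
    return sum(ans == reference for ans in samples) / len(samples)


def consistency_at_k(samples, reference):
    """1.0 if the majority-vote answer matches the reference, else 0.0.

    Ties go to the answer that appeared first (Counter.most_common order).
    """
    majority, _ = Counter(samples).most_common(1)[0]
    return float(majority == reference)


def benchmark_scores(results):
    """results: list of (samples, reference) pairs, one per problem."""
    n = len(results)
    p1 = sum(pass_at_1(s, r) for s, r in results) / n
    cons = sum(consistency_at_k(s, r) for s, r in results) / n
    return p1, cons


# Hypothetical results: 2 problems, 4 sampled answers each.
results = [
    (["42", "42", "17", "42"], "42"),
    (["3", "8", "3", "1"], "3"),
]
print(benchmark_scores(results))  # (0.625, 1.0)
```

Here the majority vote solves the second problem even though only half its samples were right, which is why consistency scores such as 76.7% on AIME tend to sit above Pass@1.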

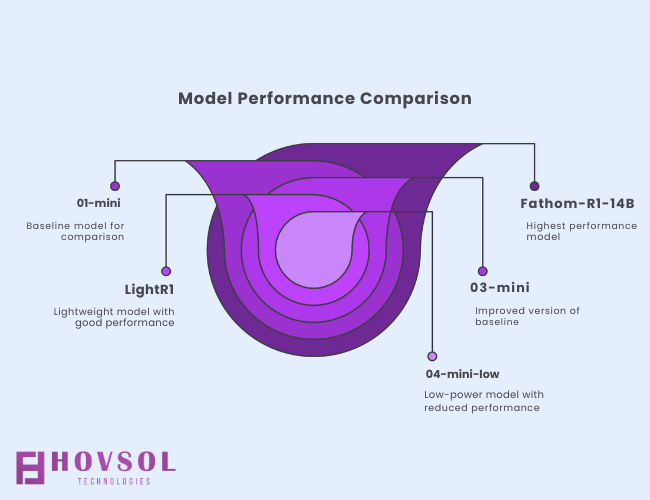

In comparative evaluations, Fathom-R1-14B performs strongly. It outperforms the open-source LightR1 model as well as OpenAI’s proprietary o1-mini and o3-mini. More strikingly, its performance rivals capable closed-source models such as o4-mini-low.

This strong showing underscores that efficient design and training can yield top-tier results. It challenges the assumption that high-performance AI demands prohibitive costs.

Transformative Use Cases

The release of Fathom-R1-14B offers immense potential across various sectors. For developers, this open-source model is a powerful tool for advanced reasoning. Because its weights can be downloaded and run on local hardware, it can be deployed without relying on a third-party API, a significant advantage for practical applications.
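For developers who want to try local deployment, the sketch below loads the model with Hugging Face Transformers and generates a response. It assumes the repository ID `FractalAIResearch/Fathom-R1-14B` (confirm it on the model’s Hugging Face page) and installed `transformers`, `torch`, and `accelerate` (needed for `device_map="auto"`). In bfloat16 the weights alone take roughly 30 GB of memory (about 14.8 billion parameters × 2 bytes), so a large GPU or multi-GPU setup is needed. The `max_new_tokens` default is an arbitrary choice.

```python
# Assumed Hugging Face repository ID; confirm it on the model page.
MODEL_ID = "FractalAIResearch/Fathom-R1-14B"


def build_messages(problem: str) -> list[dict]:
    """Wrap a problem in the chat-message format used by chat templates."""
    return [{"role": "user", "content": problem}]


def solve(problem: str, max_new_tokens: int = 4096) -> str:
    """Load the model locally and generate a response to one problem."""
    # Imported lazily so build_messages works without the heavy dependencies.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID, torch_dtype="auto", device_map="auto"
    )
    # Apply the model's own chat template and open the assistant turn.
    inputs = tokenizer.apply_chat_template(
        build_messages(problem),
        add_generation_prompt=True,
        return_tensors="pt",
        return_dict=True,
    ).to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Decode only the newly generated tokens, skipping the echoed prompt.
    prompt_length = inputs["input_ids"].shape[-1]
    return tokenizer.decode(output_ids[0][prompt_length:], skip_special_tokens=True)
```

Calling `solve("What is the sum of the first 100 positive integers?")` downloads the weights on first use and returns the model’s reasoning and answer as a single string.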

The readily available models, datasets, and training recipes empower researchers. This transparency simplifies experimentation and allows for further scaling and customization. It fosters innovation within the global AI community.

Enterprises, including the Fortune 500 companies Fractal serves, can benefit immensely. Fathom-R1-14B offers a highly cost-effective solution for complex analytics and intricate problem-solving. Its ability to handle long reasoning chains is invaluable for sophisticated business challenges.

Looking for tools that enhance content workflows powered by AI? Check out our curated list of the best AI writing tools of 2025 — several complement reasoning models like Fathom-R1-14B.

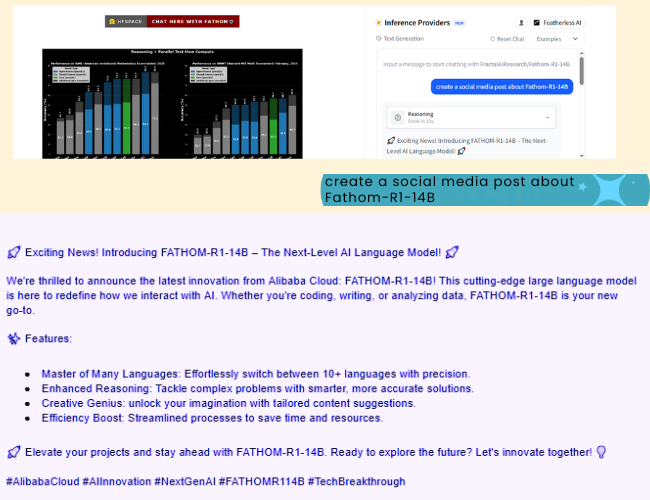

What can you do with just one prompt?

Here’s a live example of Fathom-R1-14B in action — we asked it to write a social media post, and the results were instantly usable:

This small test shows the model’s immediate utility for content teams, developers, and marketers exploring AI-powered creativity.

Try Fathom-R1-14B directly on Hugging Face: Link

The Future of Open-Source Reasoning AI

The unveiling of Fathom-R1-14B marks a significant milestone for open-source AI. It powerfully demonstrates that cutting-edge reasoning models can be both effective and widely accessible. This democratization of AI capabilities promises to accelerate innovation across the globe.

It further solidifies India’s burgeoning role in developing indigenous foundational large language models. The competition in the open-source LLM arena is undeniably intensifying. Fractal is clearly positioned at the forefront of this exciting race.

The future of AI for reasoning looks increasingly inclusive and dynamic. Through innovations like Fathom-R1-14B, high-performance AI will become more broadly beneficial. This model truly showcases what is achievable through intelligent design and efficient methodologies.

FAQs

Q1: What is Fathom-R1-14B and who built it?

Fathom-R1-14B is a 14B-parameter open-source reasoning language model developed by Fractal AI, a leading AI firm from India.

Q2: How is it different from other LLMs?

It's optimized for complex mathematical and logical reasoning, has a 16K-token context window, and was post-trained with cost-efficient techniques such as curriculum-based fine-tuning and model merging.

Q3: Can I use it for commercial projects?

Yes. Its open-source license and public training recipes make it ideal for commercial experimentation and deployment.

Q4: How much does it cost to train such a model?

Fathom-R1-14B was post-trained (fine-tuned on top of an existing base model) for only $499, which is significantly lower than typical costs for models of similar scale.

Q5: What’s next for Fractal AI?

The company has hinted at a future 70B+ parameter model as part of Project Ramanujan, which aligns with India’s broader IndiaAI Mission.