AI assistants today are smart—like “write a song, summarize your inbox, and code an app” smart. But ask one to find a document in your Google Drive or ping your CRM for last month’s leads?

Total blank.

It’s a familiar story: the model’s sharp, but it’s got no clue what’s actually going on in your business.

Why?

Because AI, for all its brains, often has zero context.

That’s the catch. AI can talk a big game, but without a bridge to real-world tools and data, it’s still just guessing in the dark.

Now enter the Model Context Protocol (MCP).

Think of it as the AI world’s version of USB-C. One clean, universal standard that lets your AI plug into just about anything—from databases to apps to web services—without custom code, shaky integrations, or hours of developer frustration.

This article isn’t just giving you a surface-level intro. It’s a real-deal breakdown of why MCP actually matters, how it’s structured, how to work with it, and why it could be the most important tool your business hasn’t adopted yet.

Why Smart AI Still Feels Useless Sometimes

Imagine an AI that can eloquently write a sales pitch or debug code… but freezes when you ask it to pull up your latest sales report or update a contact in HubSpot.

It’s not that the model’s broken.

It’s because most AI models live inside digital silos. They don’t have a way to access the very systems that run your business: CRMs, file systems, APIs. The result? Custom-built integrations that are fragile, expensive, and a nightmare to maintain.

It’s like trying to glue together a bunch of puzzle pieces from different boxes.

And the worst part?

It slows down everything.

Innovation stalls.

Teams waste time.

Your AI sits there, brilliant but boxed in.

Enter the Model Context Protocol (MCP)

This is where MCP steps in.

Short for Model Context Protocol, it’s an open standard purpose-built to help AI agents communicate with your external data, tools, and systems. It’s the universal handshake between your AI and your business operations.

Think of MCP like USB-C for AI.

Before USB-C, every device needed a different cable. Today, one plug handles power, video, and data. Easy.

MCP does the same thing for AI.

It’s a simple, powerful standard that connects AI agents (like ChatGPT, Claude, or custom assistants) to the external tools and data sources you already use.

Once you build an MCP server, any MCP-compatible AI can use it—no customization required.

No more one-off code. No more duct-taped APIs.

In short: MCP lets any AI talk to your files, databases, APIs, or apps—without building custom integrations from scratch every time.

This isn’t just another protocol.

MCP gives AI the power to understand and act on what’s happening around it—not just talk about it.

Why It’s a Shift in How We Build AI

The way we built digital systems in the past was simple and deterministic. APIs were designed with clear expectations: you ask for X, you get Y. Done.

But AI doesn’t work like that. Models now need to think, plan, and adapt in real time. They can’t just follow a set script—they need the freedom to explore options and choose tools dynamically based on the task at hand.

MCP is built with this reality in mind. It’s tailored for a future where AI operates independently, figures things out on the fly, and takes meaningful actions with minimal help.

At the Heart of MCP: A Smart, Simple Structure

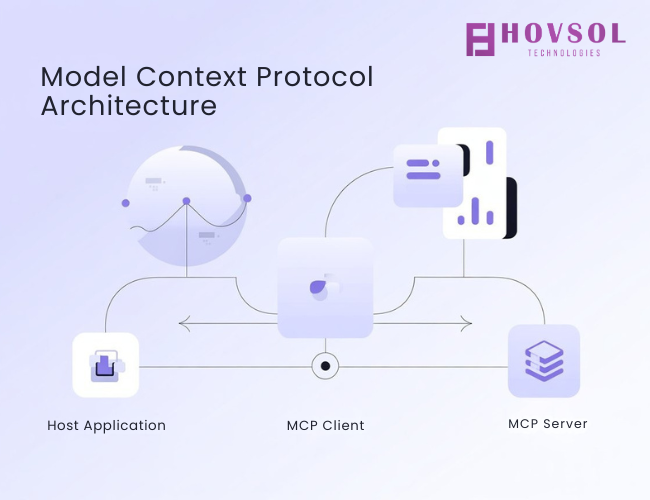

MCP is built to let AIs talk to external systems cleanly and efficiently. Under the hood, it’s a client-server setup that looks like this:

- Host Application: This is the app where users engage with the AI. It could be a desktop tool like Claude Desktop, a custom AI assistant, or even an IDE like Replit.

- MCP Client: This piece sits inside the Host. Each client keeps a dedicated connection to one MCP server (a host can run several clients, one per server) and acts as a translator, converting the AI’s requests into protocol-ready messages.

- MCP Server: This is a lightweight service that exposes functionality from your business systems—whether it’s a CRM, database, or cloud drive. Each server is focused on a particular area and speaks in MCP’s standardized language.

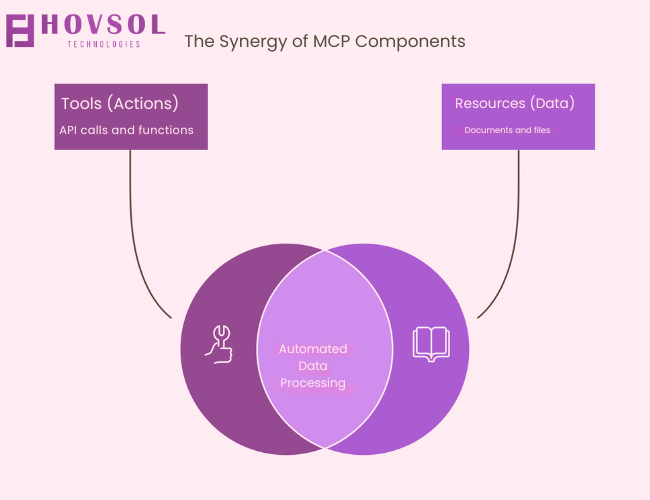

The Three MCP Primitives: Tools, Resources, and Prompts

Everything the AI does through MCP revolves around three simple building blocks:

Tools

These are the AI’s “action buttons.” They let it do things like send a message, query a database, or update a record. These tools are model-controlled—meaning the AI decides when and how to use them. Some examples:

- Create a new lead in your CRM

- Fetch customer data from a SQL database

- Send out an email or Slack message

- Trigger a payment or transaction

Tools do things—they cause changes in the external world.
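
To make that concrete, here’s a rough sketch of how a tool might be described to the AI. In MCP, a server advertises each tool with a name, a description, and a JSON Schema for its inputs (the inputSchema field). The create_lead tool, its fields, and the check_arguments helper are all hypothetical, made up for illustration; a real server would lean on an SDK and a proper JSON Schema validator.

```python
# Hypothetical tool definition, shaped like one entry in a tools/list result.
CREATE_LEAD_TOOL = {
    "name": "create_lead",  # made-up tool name
    "description": "Create a new lead in the CRM.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "name": {"type": "string"},
            "email": {"type": "string"},
        },
        "required": ["name", "email"],
    },
}


def check_arguments(tool: dict, arguments: dict) -> list[str]:
    """Return a list of problems; an empty list means the call looks OK.

    This only checks required fields and string types, which is far less
    than a real JSON Schema validator does.
    """
    schema = tool["inputSchema"]
    problems = []
    for field in schema.get("required", []):
        if field not in arguments:
            problems.append(f"missing required field: {field}")
    for field, value in arguments.items():
        spec = schema["properties"].get(field)
        if spec is None:
            problems.append(f"unknown field: {field}")
        elif spec["type"] == "string" and not isinstance(value, str):
            problems.append(f"field {field} should be a string")
    return problems
```

The schema is what lets the model work out which arguments to send, and it gives the server something to check those arguments against before anything changes in your CRM.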

Resources

These are pieces of context the AI needs to make smart decisions. Unlike tools, they don’t change anything—they’re just data the client pulls in and hands over to the AI. Things like:

- Reading a file or transcript

- Grabbing server logs

- Getting weather data

- Accessing a user profile

Resources give the model situational awareness without any side effects.
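
Here’s a minimal sketch of what “no side effects” can look like. In MCP, a resource is identified by a URI, and reading it returns a list of contents. The logs://app/today URI and the in-memory RESOURCES store are assumptions for illustration only; your server would read from real files, logs, or APIs.

```python
# Hypothetical in-memory store standing in for real files or logs.
RESOURCES = {
    "logs://app/today": {
        "mimeType": "text/plain",
        "text": "12:00 ERROR checkout timed out",
    },
}


def read_resource(uri: str) -> dict:
    """Build a result shaped like a resources/read response.

    The function only looks data up; it never writes to the store.
    """
    entry = RESOURCES[uri]  # raises KeyError for an unknown URI
    return {
        "contents": [
            {"uri": uri, "mimeType": entry["mimeType"], "text": entry["text"]}
        ]
    }
```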

Prompts

These are templates or pre-written instructions that guide how the AI behaves. Think of them as scripts or macros—usually triggered by user commands. They’re useful for:

- Summarizing a doc

- Writing in a specific format

- Standardizing back-and-forth flows

Prompts make sure the AI stays consistent and efficient.
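
As a rough illustration, a prompt can be thought of as a named template with declared arguments that expands into ready-to-send messages. The summarize_doc prompt, its wording, and the get_prompt helper below are hypothetical; only the general shape (a name, a description, a list of arguments, and a list of messages in the result) follows MCP.

```python
# Hypothetical prompt definition: a name, a description, and declared arguments.
SUMMARIZE_PROMPT = {
    "name": "summarize_doc",
    "description": "Summarize a document in a fixed format.",
    "arguments": [
        {"name": "text", "description": "Document text", "required": True}
    ],
}


def get_prompt(prompt: dict, arguments: dict) -> dict:
    """Expand the template into messages, shaped like a prompts/get result."""
    for arg in prompt["arguments"]:
        if arg.get("required") and arg["name"] not in arguments:
            raise ValueError(f"missing argument: {arg['name']}")
    text = (
        "Summarize the following document in three bullet points:\n\n"
        + arguments["text"]
    )
    return {
        "description": prompt["description"],
        "messages": [
            {"role": "user", "content": {"type": "text", "text": text}}
        ],
    }
```

Because the wording lives in the template, every user who triggers the prompt gets the same instructions, which is where the consistency comes from.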

How the Whole Thing Comes Together

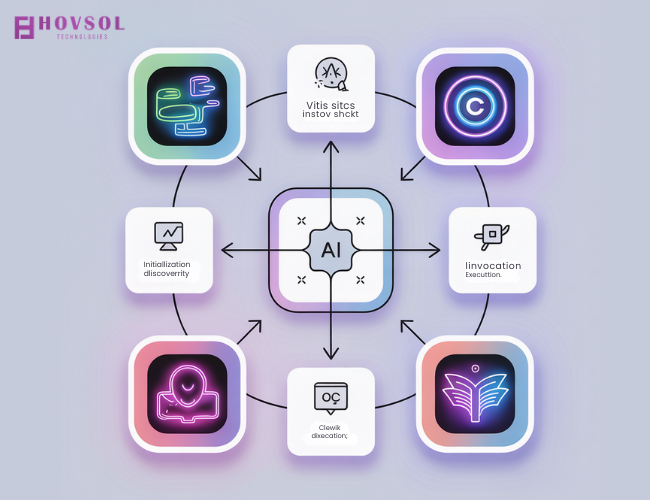

Every MCP interaction follows the same pattern:

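- Initialization: The client connects to the server, and the two agree on a protocol version and exchange the capabilities each side supports.

- Discovery: The client asks what’s available, and the server sends back a list of tools, resources, and prompts with full details.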

- Context: The AI is given the right resources and prompts so it understands what it’s working with.

- Invocation: The AI decides what to do and asks the client to trigger a tool.

- Execution: The server runs the tool or grabs the data.

- Response: The result is sent back to the client, which feeds it into the AI’s brain so it can act smarter moving forward.
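
The steps above can be sketched as a tiny in-memory exchange. This is a toy, not an SDK: ToyServer, the add tool, and run_session are invented for illustration, the protocol version string is just an example value, and the sketch skips details a real session has (such as the notification the client sends once initialization is done).

```python
class ToyServer:
    """In-memory stand-in for an MCP server (hypothetical, not an SDK class)."""

    def __init__(self):
        self.tools = {
            "add": {
                "description": "Add two numbers.",
                "inputSchema": {
                    "type": "object",
                    "properties": {"a": {"type": "number"}, "b": {"type": "number"}},
                    "required": ["a", "b"],
                },
            }
        }

    def handle(self, request: dict) -> dict:
        method = request["method"]
        params = request.get("params", {})
        if method == "initialize":
            result = {
                "protocolVersion": params["protocolVersion"],
                "capabilities": {"tools": {}},
                "serverInfo": {"name": "toy-server", "version": "0.1"},
            }
        elif method == "tools/list":
            result = {"tools": [{"name": n, **spec} for n, spec in self.tools.items()]}
        elif method == "tools/call":
            if params.get("name") != "add":
                # -32602 is the JSON-RPC 2.0 "Invalid params" code.
                return self._error(request, -32602, "Unknown tool")
            args = params["arguments"]
            total = args["a"] + args["b"]
            result = {"content": [{"type": "text", "text": str(total)}], "isError": False}
        else:
            # -32601 is the JSON-RPC 2.0 "Method not found" code.
            return self._error(request, -32601, "Method not found")
        return {"jsonrpc": "2.0", "id": request["id"], "result": result}

    @staticmethod
    def _error(request: dict, code: int, message: str) -> dict:
        return {"jsonrpc": "2.0", "id": request["id"], "error": {"code": code, "message": message}}


def run_session(server: ToyServer) -> list[dict]:
    """Walk through initialization, discovery, and one tool invocation."""
    steps = [
        ("initialize", {
            "protocolVersion": "2025-03-26",  # example value only
            "capabilities": {},
            "clientInfo": {"name": "toy-client", "version": "0.1"},
        }),
        ("tools/list", {}),
        ("tools/call", {"name": "add", "arguments": {"a": 2, "b": 3}}),
    ]
    responses = []
    for request_id, (method, params) in enumerate(steps, start=1):
        request = {"jsonrpc": "2.0", "id": request_id, "method": method, "params": params}
        responses.append(server.handle(request))
    return responses
```

In a real host, the last response would be handed back to the model as context for its next step.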

Keeping It Light: How MCP Talks Tech

MCP uses JSON-RPC 2.0—a clean, lightweight way to send structured requests and responses. It’s designed to keep things tidy between clients and servers.

It also supports different ways of connecting: locally via stdin/stdout, or remotely over HTTP, where the server can stream messages back using server-sent events. The transport options are still evolving as the spec matures.
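
For the local stdin/stdout option, messages travel as JSON with one message per line. Here’s a minimal sketch of that framing, using an in-memory buffer in place of a real process pipe; the function names are hypothetical.

```python
import io
import json


def write_message(stream, message: dict) -> None:
    """Serialize one message onto a single line.

    With default settings json.dumps escapes newlines inside strings,
    so the output never contains a raw line break.
    """
    stream.write(json.dumps(message) + "\n")


def read_messages(stream):
    """Yield one parsed message per non-empty line."""
    for line in stream:
        line = line.strip()
        if line:
            yield json.loads(line)


# Usage: a buffer stands in for the pipe between client and server.
pipe = io.StringIO()
write_message(pipe, {"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
write_message(pipe, {"jsonrpc": "2.0", "id": 2, "method": "ping", "params": {"note": "line1\nline2"}})
pipe.seek(0)
received = list(read_messages(pipe))
```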

Why MCP Isn’t Just Cool—It’s Critical

1. Standardization

Instead of creating new integrations for every single system, MCP gives you one way to connect everything. Your AI apps suddenly become modular, portable, and way easier to maintain.

2. Plug-and-Play Speed

Want your AI to access Google Drive, a SQL database, and your CRM? With MCP-compatible servers, you’re already halfway there. Just plug them in and go.

3. Real Autonomy

Forget basic scripts. With MCP, agents can fetch info, take action, and complete full workflows on their own—no babysitting required.

4. Lower Dev Time & Cost

No more maintaining spaghetti-code integrations. MCP cuts down on complexity and frees up your engineers to focus on big-picture work.

5. Predictable Behavior

Uniform request and response formats mean you get consistent, reliable results. Easier to debug, easier to trust.

6. Deeper Context

Resources and prompts give the AI rich background info, so it’s not just making guesses—it’s working with the full picture.

7. Build Once, Use Everywhere

One server module for file management or CRM tasks can be reused across apps and teams. Build it once, scale it forever.

8. Made for AI-Native Systems

This isn’t an afterthought. MCP is built specifically for agents that reason, act, and adapt—helping you build smarter systems from the ground up.

Rolling Up Your Sleeves: How to Build with MCP

Ready to dive in? Good news: there are SDKs in Python, TypeScript, Java, Rust, C#, Swift, Kotlin, and more. You’re not starting from scratch.

Here’s the plan:

On the Server Side

- Define Resources: What data can you provide, and how should the AI use it?

- Define Tools: What actions can your system perform, and what do they look like?

- Create Prompts: Templates that streamline common requests.

- Set Up Logging: Track every request and result to make debugging easy.

- Choose a Transport: Local or remote—whatever fits your needs.
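
To give a feel for the server-side checklist, here’s a standard-library-only sketch of a registry with tools, resources, and logging. SketchServer and everything registered on it are hypothetical; the SDKs give you their own, more complete way to do this, so treat the names below as placeholders and check your SDK’s docs for the real API.

```python
import logging

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("sketch-mcp-server")


class SketchServer:
    """Toy registry for tools and resources (not an SDK class)."""

    def __init__(self, name: str):
        self.name = name
        self.tools = {}      # tool name -> function
        self.resources = {}  # resource URI -> function

    def tool(self, func):
        """Register a function as a tool under its own name."""
        self.tools[func.__name__] = func
        return func

    def resource(self, uri: str):
        """Register a function as the reader for a resource URI."""
        def register(func):
            self.resources[uri] = func
            return func
        return register

    def call_tool(self, name: str, arguments: dict):
        """Run a tool and log both the request and the result."""
        log.info("tool call: %s %s", name, arguments)
        result = self.tools[name](**arguments)
        log.info("tool result: %s", result)
        return result


server = SketchServer("crm")
LEADS = []  # stand-in for a real CRM backend


@server.tool
def create_lead(name: str, email: str) -> str:
    LEADS.append({"name": name, "email": email})
    return f"created lead {name} <{email}>"


@server.resource("crm://leads/count")
def lead_count() -> str:
    return str(len(LEADS))
```

The transport is deliberately left out here; the same registry could sit behind a local or a remote connection.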

On the Client Side

- Connect to Servers: Initialize and manage connections to all your MCP servers.

- Translate Queries: Convert AI’s intent into proper MCP calls.

- Surface Info in the UI: Let users trigger AI actions with context-aware interfaces.

Quick-Start Blueprint

- Scaffold a Server: Use an SDK in your preferred language.

- Define JSON Schemas: Be explicit about what your tools and resources expect.

- Use Templates: Make it easy for the AI to fill in the blanks using dynamic URIs.

- Secure It: Set up proper transport layers for safe communication.

Real-Life Use Case: AI Filing Jira Bugs

Say you’re a mid-sized SaaS company with a problem:

“Support reps are drowning in manual bug reports. Our LLM can understand complaints but can’t file tickets.”

You want your AI to turn user complaints into bug reports, automatically.

With MCP, the plan looks like this:

- Set up a Jira MCP server with a create_ticket tool.

- Let the AI discover it using tools/list.

- Use error logs or messages to generate tickets with tools/call.

No AI app has to carry its own Jira-specific logic again. That logic lives in one place, the MCP server, and everything else is clean, AI-friendly integration.

The expected result?

Less time spent filing tickets by hand, and a way happier team.
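
Here’s roughly what the tools/call step in this scenario could look like on the wire. The create_ticket tool comes from the example above, but its summary and description arguments are assumptions, and in practice the model would write those arguments itself rather than a helper function slicing up a log.

```python
def build_create_ticket_call(request_id: int, error_log: str) -> dict:
    """Turn an error log into a tools/call request for a create_ticket tool.

    The argument names (summary, description) are hypothetical; a real
    Jira server would publish its own input schema via tools/list.
    """
    lines = error_log.strip().splitlines()
    summary = lines[0][:80] if lines else "Untitled bug"
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {
            "name": "create_ticket",
            "arguments": {
                "summary": summary,
                "description": error_log.strip(),
            },
        },
    }


# Usage with a made-up error log.
call = build_create_ticket_call(7, "TypeError: x is undefined\n  at checkout.js:42\n")
```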

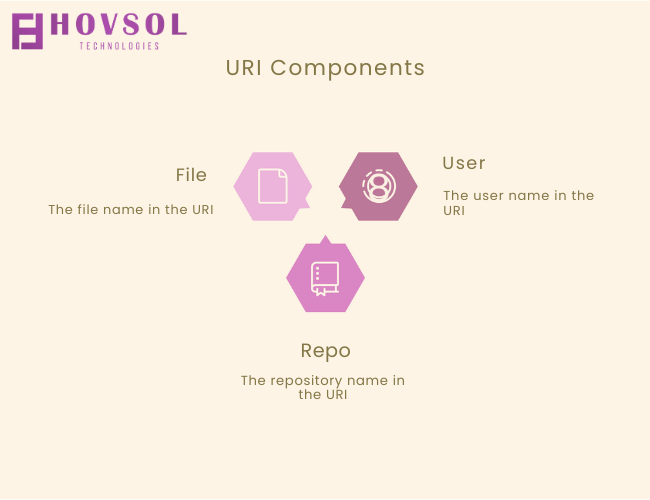

Resource Templates = AI on Autopilot

Resource templates let the AI fill in dynamic fields based on the conversation. For example:

repo://{user}/{repo}/{file}

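If someone asks for “the README in Alice’s demo repo,” the AI picks out the user, the repo, and the file, then fills in the template to build a URI like repo://alice/demo/README.md. The same trick works for business data: with a template keyed on department and quarter, a request for “Q3 sales report in marketing” tells the AI to look for “Q3” and “marketing” and build a URI to fetch the right file.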

No hardcoded paths. Just smart, flexible access.
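
Filling in a template is mostly string substitution. The sketch below handles only the simple {name} placeholder form shown above, not every feature of URI templates, and the values passed in are invented for illustration.

```python
import re
from urllib.parse import quote


def expand_template(template: str, values: dict) -> str:
    """Replace each {name} placeholder with its percent-encoded value.

    Raises KeyError if the template names a value that wasn't provided.
    """
    def fill(match: re.Match) -> str:
        key = match.group(1)
        return quote(str(values[key]), safe="")

    return re.sub(r"\{(\w+)\}", fill, template)


# Usage with made-up values.
uri = expand_template(
    "repo://{user}/{repo}/{file}",
    {"user": "alice", "repo": "reports", "file": "Q3 sales.md"},
)
```

Percent-encoding the values keeps spaces and slashes in a file name from changing the structure of the URI.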

Debugging Tips: Keeping It Smooth

- Use Logs: Trace every call and result inside your server.

- Check UI Indicators: Tools like Claude Desktop show which servers are connected.

- Try MCP Inspector: A hands-on tool to test and troubleshoot servers.

- Handle Errors Properly: Let the AI receive, learn from, and respond to failures.
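
For the last two tips, one common pattern is to wrap every tool so that failures get logged and then reported back to the AI as a normal result flagged with isError, instead of crashing the server. The wrapper and the divide tool below are hypothetical examples of that pattern.

```python
import logging

log = logging.getLogger("mcp-debug")


def run_tool_safely(name: str, func, arguments: dict) -> dict:
    """Run a tool, log the call, and return a tool result either way."""
    log.info("calling %s with %s", name, arguments)
    try:
        text = str(func(**arguments))
        is_error = False
    except Exception as exc:  # report any failure back to the model
        log.exception("tool %s failed", name)
        text = f"{type(exc).__name__}: {exc}"
        is_error = True
    return {"content": [{"type": "text", "text": text}], "isError": is_error}


def divide(a: float, b: float) -> float:
    """Made-up tool used to show both outcomes."""
    return a / b


ok = run_tool_safely("divide", divide, {"a": 6, "b": 3})
bad = run_tool_safely("divide", divide, {"a": 6, "b": 0})
```

Because the failure comes back as readable text, the model can see what went wrong and try again with different arguments.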

Know the Limits: MCP Is Just One Layer

MCP isn’t a full-stack platform—it’s a protocol. That means you’ll need to build your own solutions for:

- Identity & Permissions

- Version Control

- Monitoring

- Governance

Think of MCP as the foundation. You still need the walls, roof, and plumbing.

Security Still Matters

MCP supports OAuth and session control, but real enterprise-grade security needs extra layers. That includes:

Rigorous Session Management

Essential for secure communication between client and server, especially for remote connections.

OAuth 2.1

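MCP’s authorization spec for remote HTTP servers is built on OAuth 2.1, which significantly improves secure connectivity. Authorization itself is optional at the protocol level, so it’s on you to switch it on. However, broader solutions for federated identity and token-based access are still needed for seamless enterprise adoption across diverse systems.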

LLM Guardrails

Beyond the protocol, robust LLM guardrails are critical at the application level to prevent prompt injection attacks that could lead to malicious tool invocation or data exfiltration.

You’ll need to build in those guardrails yourself.
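
One simple application-level guardrail is a gate that sits between the model’s tool request and the actual call. The sketch below is an assumption about how you might structure it, with made-up tool names; it won’t stop prompt injection on its own, but it limits what an injected instruction can trigger without a human saying yes.

```python
def gate_tool_call(tool_name, arguments, allowed_tools, needs_approval, approve):
    """Decide whether a requested tool call may proceed.

    allowed_tools:  tools this app may ever call
    needs_approval: subset of tools that require a human yes/no
    approve:        callback(tool_name, arguments) -> bool, e.g. a UI dialog
    """
    if tool_name not in allowed_tools:
        return "blocked"
    if tool_name in needs_approval and not approve(tool_name, arguments):
        return "denied"
    return "allowed"


# Usage with hypothetical tool names and a stand-in approval callback.
ALLOWED = {"search_docs", "send_email"}
NEEDS_APPROVAL = {"send_email"}


def always_no(tool_name, arguments):
    return False


def always_yes(tool_name, arguments):
    return True
```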

Heads Up: It’s Still Early Days

MCP is evolving fast, but it’s not universally adopted yet. The docs are improving, the ecosystem is growing, and more companies are jumping in—but there’s still a learning curve.

Also, your AI model needs to be smart enough to actually use the data. Simpler models might fumble here.

Looking Ahead: Where MCP Is Going

A Burgeoning Ecosystem

Developers are creating servers for tools like GitHub, Slack, Google Drive, and even cloud platforms like AWS.

Tool Registries Are Coming

Think app stores for AI tools—central directories where AIs can discover, verify, and use new tools on the fly.

Comparison Corner

- Custom Code: Replaced by clean, reusable modules.

- ChatGPT Plugins: MCP is more flexible and open, and it keeps a live, two-way connection instead of making one-off calls.

- LangChain & Friends: MCP isn’t a rival—it complements frameworks like LangChain beautifully.

What MCP Unlocks for the Future

Imagine AIs that can explore new capabilities in real-time, find tools they’ve never seen before, and integrate them on the spot. That’s what MCP and its registries make possible.

At HOVSOL Technologies, we see MCP as a game-changer. It’s accelerating prototypes, shrinking dev time, and helping us bring automation to every corner of business.

FAQs

What is the Model Context Protocol (MCP)?

MCP is an open standard for connecting AI applications with tools, data sources, and systems. It defines a client-server architecture using JSON-RPC to enable seamless, scalable AI integration.

How is MCP different from APIs or plugins?

Unlike hardcoded APIs or proprietary plugins, MCP offers a flexible, standardized protocol. It supports dynamic discovery, tool invocation, and contextual reasoning, making it far more adaptive.

Can I build my own MCP server?

Absolutely. With SDKs available in multiple languages, even small teams can define tools, resources, and prompts in MCP. It’s a great way to turn legacy systems into AI-accessible capabilities.

Is MCP secure?

MCP promotes local-first access, builds its authorization for remote connections on OAuth 2.1, and encourages explicit user approval. But broader security measures (session control, logging, observability) are up to you.

Who is using MCP today?

Major players like Anthropic, OpenAI, Microsoft, Salesforce, and Replit are adopting or experimenting with MCP. Open-source contributors are also rapidly expanding the ecosystem.

What’s the biggest benefit of using MCP?

Reusability. You build a tool once, and every AI app that supports MCP can use it. That reduces dev time, boosts innovation, and future-proofs your AI strategy.

Final Thoughts

MCP isn’t a buzzword anymore.

It’s a practical, scalable way to finally make your AI useful in the real world.

But here’s the deal—it’s a protocol, not a silver bullet. You still need to build the governance, security, and infrastructure around it.

If your AI still depends on duct-taped APIs and isolated scripts, you’re playing yesterday’s game. MCP gives you a path forward—where your AI acts, thinks, and connects.
